When people think about making AI more capable, the usual answer is simple: make the model bigger. Larger models, more parameters, more compute. That’s been the dominant strategy for years.

But what if there’s another way?

In this post, I’ll walk you through research from NVIDIA, Google, DeepSeek, CAMEL, and our own team that shows a different path forward. A path where small models working together can beat the biggest foundation models.

And to make this clear, I’ll break the post into three parts:

The history and context behind scaling AI.

Examples of horizontal scaling in action.

Our experiment that hit #1 on the GAIA Benchmark for verified systems.

The Bitter Lesson: Why Bigger Worked

Rich Sutton’s famous “Bitter Lesson” tells us that progress in AI comes less from human-crafted expertise and more from computation. The history of chess computers is a perfect example: carefully designed rule-based engines gave way to brute-force compute and data-driven approaches.

But here’s the question I am asking today: have we scaled vertically enough?

Scaling Horizontally: From One Giant to Many Specialists

Instead of making a single model bigger, what if we built systems of many smaller agents, each specialised and working together like humans in a team?

That’s what we call scaling horizontally.

Think about it: society works because individuals specialise and collaborate. Why shouldn’t AI systems do the same?

Example 1: Role-Playing with CAMEL-AI

CAMEL-AI was one of the first experiments to test this idea with LLM-based multi-agent systems. Two agents role-play, one as a “user” and one as an “assistant.”

Instead of solving a task alone, they collaborate through conversation. And it works: CAMEL-AI showed better results than a single model working in isolation.

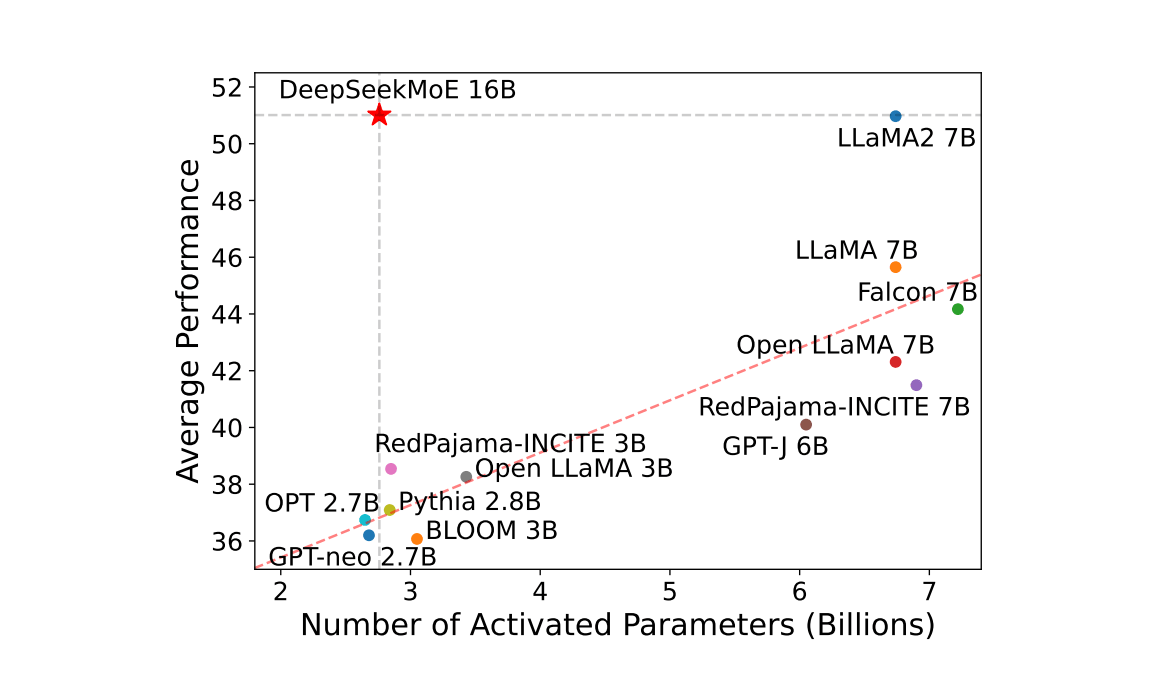
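To make the role-play loop concrete, here is a minimal sketch in Python. It is not CAMEL-AI’s actual API: `ScriptedUser`, `EchoAssistant`, `role_play`, and the `<DONE>` stop token are all hypothetical names, and the two agents are scripted stand-ins where a real system would call an LLM.

```python
from typing import List, Tuple


class ScriptedUser:
    """Hypothetical 'user' role: issues one instruction per turn."""

    def __init__(self, instructions: List[str]) -> None:
        self._pending = list(instructions)

    def next_instruction(self, last_reply: str) -> str:
        # Assumption: a real user agent would condition on last_reply via an LLM call.
        return self._pending.pop(0) if self._pending else "<DONE>"


class EchoAssistant:
    """Hypothetical 'assistant' role: answers each instruction."""

    def respond(self, instruction: str) -> str:
        # Placeholder for an LLM call that carries out the instruction.
        return f"Solution to: {instruction}"


def role_play(user: ScriptedUser, assistant: EchoAssistant,
              max_turns: int = 10) -> List[Tuple[str, str]]:
    """Alternate user instructions and assistant replies until the user stops."""
    transcript: List[Tuple[str, str]] = []
    reply = ""
    for _ in range(max_turns):
        instruction = user.next_instruction(reply)
        if instruction == "<DONE>":
            break
        reply = assistant.respond(instruction)
        transcript.append((instruction, reply))
    return transcript


transcript = role_play(ScriptedUser(["Outline a plan", "Write the code"]), EchoAssistant())
for instruction, reply in transcript:
    print(f"user: {instruction}\nassistant: {reply}")
```

The point of the sketch is the shape of the loop: the task is solved through alternating turns rather than a single prompt, and `max_turns` keeps the conversation from running forever.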

Example 2: Mixture of Experts with DeepSeek

DeepSeek took a different approach with Mixture-of-Experts (MoE).

Here, instead of one giant dense network, the model contains many smaller “expert” sub-networks. A router decides which experts to activate for each token.

The result?

DeepSeekMoE outperformed other models of similar size because each expert specialised. Instead of every parameter working on every input, the model routes work to the right experts.
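The routing idea can be sketched as a generic top-k gate in plain Python. This is an illustration of the general technique only, not DeepSeek’s implementation: `moe_forward`, the toy experts, and the router weights below are all made-up assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[Sequence[float]], List[float]]


def softmax(scores: Sequence[float]) -> List[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token: Sequence[float],
                router_weights: Sequence[Sequence[float]],
                experts: Sequence[Expert],
                top_k: int = 2) -> Tuple[List[float], List[int]]:
    """Route one token to its top_k experts and mix their outputs."""
    # Router: one score per expert (dot product with that expert's router row).
    scores = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    probs = softmax(scores)
    # Only the top_k experts run; the rest are skipped entirely.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(token)
    for i in chosen:
        gate = probs[i] / norm  # renormalise gates over the chosen experts
        expert_out = experts[i](token)
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


# Toy experts (assumption): each one just scales its input by a constant.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[0.1, 0.0], [2.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]
output, chosen = moe_forward([1.0, 0.0], router_weights, experts)
print(chosen, output)
```

With these toy weights the router picks experts 1 and 2 and never calls the other two, which is where the compute saving comes from: parameters exist for every expert, but only a few are used per token.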

Example 3: Grok 4 Heavy as a Study Group

Elon Musk’s xAI introduced Grok 4 Heavy, which spawns multiple agents in parallel. Each works on the problem independently, then they compare notes like a study group.

Sometimes only one agent cracks the trick, but once it is shared, all benefit.

This is horizontal scaling in action.
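Here is a rough sketch of the study-group pattern in Python. It is not how xAI’s system is implemented (those details are not public in this post); `study_group`, the toy agents, and the `check` verifier are hypothetical, and the sketch assumes answers can be checked cheaply.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

Agent = Callable[[int], int]


def study_group(problem: int, agents: List[Agent],
                check: Callable[[int, int], bool]) -> int:
    """Run every agent in parallel, then 'compare notes' on the answers."""
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        answers = list(pool.map(lambda agent: agent(problem), agents))
    # If any single agent cracked it, that verified answer is shared with all.
    verified = [a for a in answers if check(problem, a)]
    if verified:
        return verified[0]
    # Fallback (assumption): with no verified answer, take the most common one.
    return Counter(answers).most_common(1)[0][0]


# Toy problem: find x such that x * x == n. Two agents guess wrong, one gets it.
agents: List[Agent] = [
    lambda n: 35,
    lambda n: 36,
    lambda n: round(n ** 0.5),
]
answer = study_group(1369, agents, check=lambda n, x: x * x == n)
print(answer)
```

Note the design choice: a plain majority vote would lose here, because two of three agents are wrong. Checking answers before adopting them is what lets one agent’s insight benefit the whole group.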

Example 4: OWL by CAMEL

Another approach is OWL from CAMEL, where you have a coordinator agent distributing work to specialised agents like a web agent, coding agent, or document agent.

This is closer to how teams work in organisations: one coordinator, many specialists.
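The coordinator pattern can be sketched in a few lines of Python. This is not OWL’s real interface: `SPECIALISTS`, `pick_specialist`, and `coordinate` are hypothetical names, and the keyword rule stands in for what would likely be an LLM-based decision in a real coordinator.

```python
from typing import Callable, Dict, List

Specialist = Callable[[str], str]

# Hypothetical specialists; each would wrap a small model plus its own tools.
SPECIALISTS: Dict[str, Specialist] = {
    "web": lambda task: f"[web agent] handled: {task}",
    "code": lambda task: f"[coding agent] handled: {task}",
    "document": lambda task: f"[document agent] handled: {task}",
}


def pick_specialist(subtask: str) -> str:
    """Toy keyword rule (assumption); a real coordinator would reason about the task."""
    text = subtask.lower()
    if any(word in text for word in ("search", "browse", "website")):
        return "web"
    if any(word in text for word in ("script", "code", "compute")):
        return "code"
    return "document"


def coordinate(subtasks: List[str]) -> List[str]:
    """The coordinator hands each subtask to exactly one specialist."""
    return [SPECIALISTS[pick_specialist(subtask)](subtask) for subtask in subtasks]


results = coordinate([
    "Search for the paper",
    "Write a script to sum the column",
    "Summarise the PDF",
])
for line in results:
    print(line)
```

Every subtask passes through `coordinate`, which keeps the design simple but also makes the coordinator the single point every message has to go through.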

Example 5: Coral Protocol – Graph of Agents

We ran an experiment that pushed this concept further.

Instead of relying on a single router or coordinator, Coral organises agents into a graph.

Agents can start threads, mention each other, and form human-like conversations. This graph-style communication reduces bottlenecks and allows many more agents to coordinate effectively.
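To illustrate threads and mentions, here is a small Python sketch. It is not Coral Protocol’s actual API: `AgentGraph`, `start_thread`, `post`, and `edges` are hypothetical, and the rule that a mention pulls an agent into the thread is an assumption made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Set, Tuple


@dataclass
class Message:
    sender: str
    text: str
    mentions: List[str]


@dataclass
class Thread:
    topic: str
    participants: Set[str]
    messages: List[Message] = field(default_factory=list)


class AgentGraph:
    """Hypothetical message board where agents talk directly to each other."""

    def __init__(self) -> None:
        self.threads: Dict[str, Thread] = {}
        self.inbox: Dict[str, List[Message]] = {}

    def start_thread(self, topic: str, creator: str, participants: Iterable[str]) -> None:
        self.threads[topic] = Thread(topic, {creator, *participants})

    def post(self, topic: str, sender: str, text: str,
             mentions: Iterable[str] = ()) -> Message:
        thread = self.threads[topic]
        if sender not in thread.participants:
            raise ValueError(f"{sender} is not in thread {topic}")
        message = Message(sender, text, list(mentions))
        thread.messages.append(message)
        for name in message.mentions:
            thread.participants.add(name)  # assumption: a mention invites the agent
            self.inbox.setdefault(name, []).append(message)
        return message

    def edges(self) -> Set[Tuple[str, str]]:
        """Who has addressed whom: the communication graph."""
        return {(m.sender, target)
                for t in self.threads.values()
                for m in t.messages
                for target in m.mentions}


graph = AgentGraph()
graph.start_thread("gaia-q1", "planner", ["web"])
graph.post("gaia-q1", "planner", "Find the source for this claim", mentions=["web"])
graph.post("gaia-q1", "web", "Found a PDF, can you parse it?", mentions=["document"])
print(sorted(graph.edges()))
```

In this example the web agent asks the document agent for help directly, without routing the request back through the planner. That direct edge is the difference from a single-coordinator design.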

Putting It to the Test: GAIA Benchmark

So does this actually work?

We tested our Coral system on the GAIA Benchmark.

GAIA problems are intentionally hard for AI but easy for humans, requiring reasoning, search, tool use, and planning.

Here are the results:

Our agents scored 60% overall with small models, beating Microsoft’s Magentic-UI (42%). What’s even more interesting is that we also outperformed other systems running large models, such as Claude 3.5 and 3.7.

At Levels 1 and 2, Coral outperformed larger models. On Level 3, the biggest models still held an edge, likely because they can improvise when tools are limited. But the key insight remains: horizontal scaling lets small models punch above their weight.

Why This Matters

The arms race of building bigger models is costly, risky, and centralised. Horizontal scaling offers an alternative:

Faster → smaller models run cheaper and respond quicker.

Safer → easier to interpret, monitor, and control.

Scalable → new agents can be added like Lego blocks.

And most importantly: it works. Our GAIA benchmark results prove it.

They show not only that a system of small-model agents can outperform other small-model systems, but also that we might be able to scale past what we thought was possible with large models.

Everyone’s racing to build the biggest, smartest model. But the future of AI might look more like human society: a network of specialists, collaborating through protocols.

Explore the full GAIA results here: https://gaia.coralprotocol.org/

Thanks for reading!

Feel free to connect with me or check out some other work I have done.

X/Twitter: https://x.com/omni_georgio