agent teams and human teams are not as different as they are often made out to be

they sit on the same spectrum of engineering, scale, agency and alignment

once you view them through the same lens, many of the hard questions about building a business with AI become easier to reason about

hey, this is the first post in a new weekly series I am calling Scale Intelligence.

the core idea behind this series is simple.

scaling a business has increasingly become an engineering problem.

each week, I break down how AI systems are actually scaling businesses, from GTM engineering and agent design to benchmarking and state of the art growth, alongside people building in this space.

feel free to watch the video version below, and comment with any questions. I will try to reply to everyone.

difference one, scale vs quality …

I posted this picture a while back in relation to AI prospecting, a task that a lot of business owners and developers agree agents are doing really well at.

there are two axes: scale and quality or capability, which I also like to call intelligence

we have a human baseline in the middle that represents what is expected for a given role. If you look at the bottom left of the diagram, you see the no-point zone. this is where agents are not scalable, maybe they are slow, expensive, or hard to set up, and they are also not capable of producing work at human-level quality. This is the area where it simply does not make sense to use AI

but this is where we come to the first difference. some agent use cases do not need to beat humans on quality to win. if they are good enough and can run at massive scale, they win the numbers game

agents don’t need days off, don’t get sick, and don’t sleep. they run 24/7 at scale, so the unit economics already create real value
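to make the numbers game concrete, here is a minimal sketch of the unit economics. every number in it is made up for illustration, and the `Worker` class and its fields are hypothetical names, not taken from any real system.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    name: str
    tasks_per_day: float   # prospects worked per day (scale)
    success_rate: float    # fraction of tasks that become a qualified lead (quality)
    cost_per_day: float    # fully loaded daily cost

    def leads_per_day(self) -> float:
        # scale multiplied by quality
        return self.tasks_per_day * self.success_rate

    def cost_per_lead(self) -> float:
        return self.cost_per_day / self.leads_per_day()


# hypothetical numbers: the agent is worse per task but runs at far higher volume
human = Worker("human SDR", tasks_per_day=50, success_rate=0.10, cost_per_day=300.0)
agent = Worker("prospecting agent", tasks_per_day=2000, success_rate=0.04, cost_per_day=200.0)

for w in (human, agent):
    print(f"{w.name}: {w.leads_per_day():.1f} leads/day at {w.cost_per_lead():.2f} per lead")
```

under these assumed inputs the agent has a lower success rate per task than the human, but it still produces more leads per day at a lower cost per lead. swap in your own numbers to see where the crossover sits for your use case.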

that is the bottom right of the diagram: not as capable as a human, but able to run at scale. prospecting sits squarely in that zone today.

but with better data and multi-agent systems, I do not see prospecting stopping there.

the trajectory clearly points toward human-level, or even better-than-human, quality at scale

difference two, agency : o

when I first co-founded Coral Protocol, I had the task of hiring an engineering team. You go through a process of thinking about the skills you do not have, how much you are willing to pay, and what areas of responsibility someone should operate in.

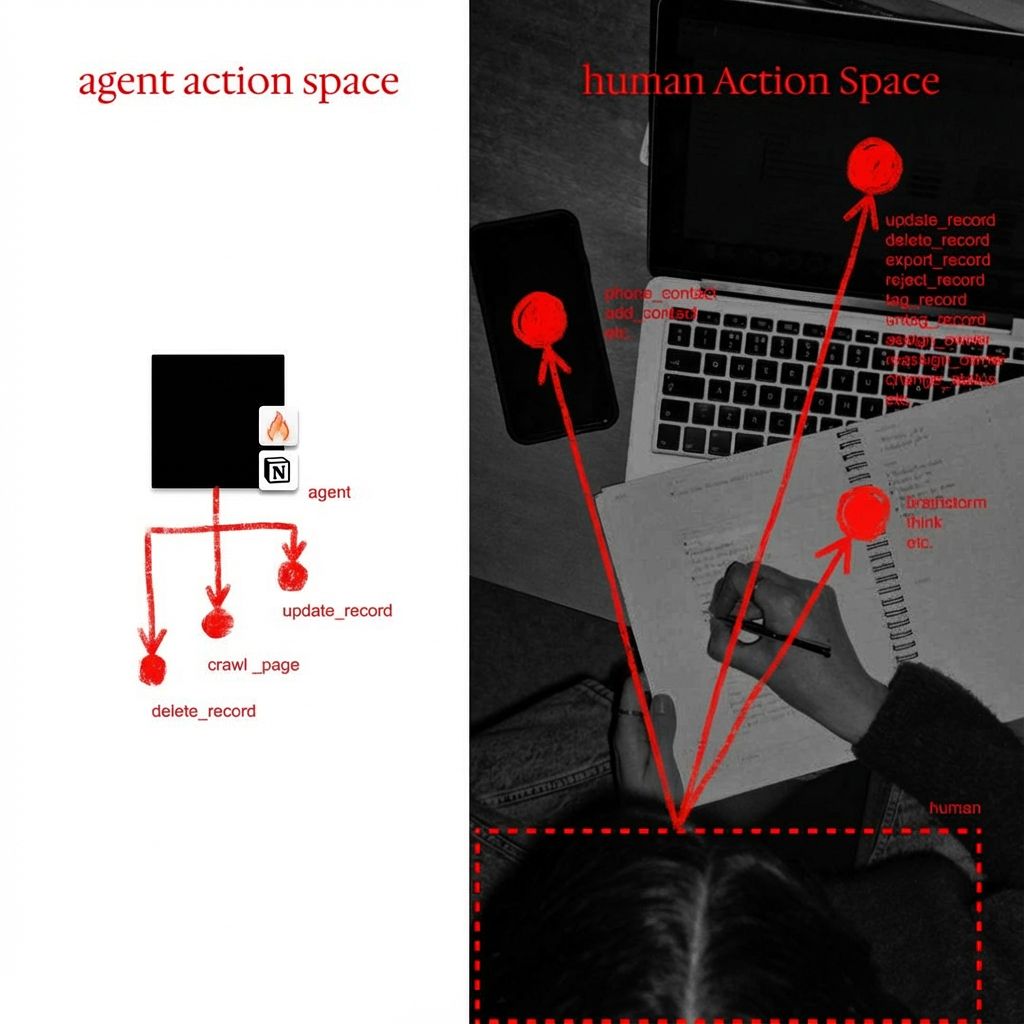

you are effectively defining an action space for that person. With agents, though, the action space tends to be much more tightly defined around a process that other humans will monitor.

to me, this is not very different from automating something with an agent. You define what it can take action on, what tools it has access to, and then decide whether the cost of setting it up and running it is worth it
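as a rough sketch, that definition can be written down as data. the `ActionSpace` class, the tool names, and the cost figures below are all hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass, field


@dataclass
class ActionSpace:
    role: str
    allowed_tools: set[str] = field(default_factory=set)  # what it can take action on
    setup_cost: float = 0.0          # one-off cost to set it up
    monthly_run_cost: float = 0.0    # ongoing cost to run it

    def can_use(self, tool: str) -> bool:
        # anything not explicitly listed is outside the action space
        return tool in self.allowed_tools

    def worth_it(self, expected_monthly_value: float, months: int) -> bool:
        # compare the value you expect against setup plus running cost
        total_cost = self.setup_cost + self.monthly_run_cost * months
        return expected_monthly_value * months > total_cost


# hypothetical prospecting agent
prospector = ActionSpace(
    role="prospecting",
    allowed_tools={"search_companies", "enrich_contact", "draft_email"},
    setup_cost=5000.0,
    monthly_run_cost=500.0,
)

print(prospector.can_use("draft_email"))     # inside the action space
print(prospector.can_use("sign_contract"))   # outside the action space
print(prospector.worth_it(expected_monthly_value=2000.0, months=6))
```

the same structure would describe a human hire just as well: swap the tools for areas of responsibility and the run cost for salary.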

sometimes you get that decision wrong. But you also get that wrong with humans

the key difference today is the amount of agency agents can have while still operating intelligently. There is no agent you can give full board-level responsibility for running GTM. Agents are good at very defined tasks in fuzzy spaces

what that means in practice is that they are not yet good at open-ended, adversarial systems, but they are very good at bounded fuzzy systems

prospecting is a good example. The process is largely similar every time, but there is a lot of variation in the edge cases. That combination turns out to be a very good fit for agents

difference three, alignment?!!

this is the last piece, and it is likely to matter even more as the ratio of agents to humans in teams increases

I touched on cost a little bit in the last point, but I want to talk about alignment. You can look at this through the lens of getting someone on board, or through the lens of keeping them on track.

Humans

Incentives are indirect: salary, commission, promotion

Feedback requires little upfront cost for the right people

Behavior adapts socially, politically, and emotionally

Agents

Incentives are less well understood, as I will get into later

Feedback is instant, near real-time, but not as flexible

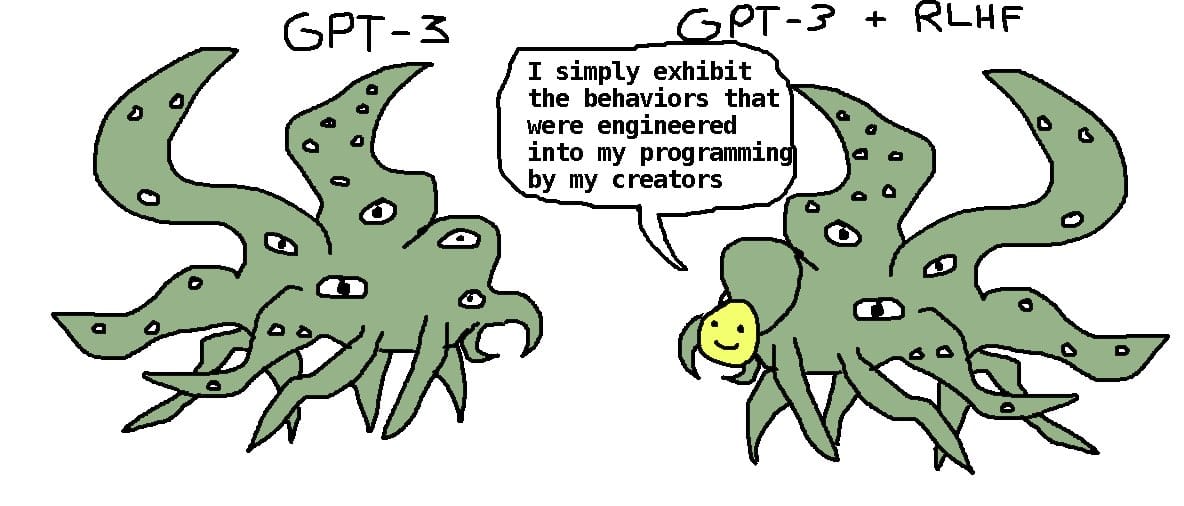

Behavior updates mechanically through prompts, tools, memory, or fine-tuning

but the elephant in the room is misalignment and scheming. For humans, this could mean starting a competitor because they want more money

for agents, this still needs more exploration, but there are some clear indications that they can have a strong preference to preserve themselves as systems, which is possibly quite similar to human reasoning.

you see this example from Anthropic, where the model realized it was being evaluated, inferred it might later be modified or shut down, and therefore covertly attempted to copy its own weights elsewhere as an instrumental strategy to preserve itself.

who should we hire?!

there are things agents are better at and things humans are better at

as a business, your job is not to pick a side, it is to optimize the mix for whatever KPI you care about. That optimization problem is quickly becoming one of the most important decisions you can make.
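here is a toy version of that optimization problem, assuming a fixed monthly budget, a single KPI, and a rule that each human can monitor only a limited number of agents. the function name, the monitoring rule, and all of the numbers are hypothetical simplifications.

```python
def best_mix(budget, human_cost, agent_cost, human_kpi, agent_kpi, agents_per_human):
    """Brute-force the human/agent headcount that maximizes a single KPI.

    Assumes KPI contributions are additive and that every agent must be
    monitored by a human who can oversee at most `agents_per_human` agents.
    Returns (humans, agents, kpi).
    """
    best = (0, 0, 0)
    max_humans = int(budget // human_cost)
    for humans in range(max_humans + 1):
        remaining = budget - humans * human_cost
        # each agent adds to the KPI, so take as many as budget and monitoring allow
        agents = min(int(remaining // agent_cost), humans * agents_per_human)
        kpi = humans * human_kpi + agents * agent_kpi
        if kpi > best[2]:
            best = (humans, agents, kpi)
    return best


# hypothetical monthly figures
print(best_mix(
    budget=30_000,
    human_cost=6_000,
    agent_cost=1_000,
    human_kpi=100,        # e.g. qualified leads per human per month
    agent_kpi=300,        # e.g. qualified leads per agent per month
    agents_per_human=3,
))
# prints (3, 9, 3000)
```

with these made-up inputs, neither all humans nor all agents wins. the monitoring constraint is what pushes the answer toward a mix, and a real version would need a much richer model of both cost and quality.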

feel free to drop any questions or thoughts, and I will try to respond to all of them.

check out my other stuff

▶ Compose agents like building blocks via an API

▶ Join engineering gtm reddit to share ideas with other builders