Over the last few weeks, you might have seen headlines claiming OpenAI has figured out hallucinations. It’s a bold claim, but one that deserves careful unpacking.

Some researchers argue that hallucinations aren’t just an odd quirk of language models, but a fundamental part of how these systems work.

In this piece, I want to break down:

What hallucinations actually are

Why some researchers argue they’re inevitable

What OpenAI’s new research contributes, and why everyone is so excited about it

The most promising ways we can reduce hallucinations in practice

What Do We Mean by “Hallucination”?

When we talk about hallucinations in AI, we don’t mean the human kind. We mean moments when a model produces something that sounds confident, but is simply wrong.

Yann LeCun summed it up well in a Time interview: “These systems hallucinate. They don’t really understand the real world. They don’t have common sense. And so they can make really stupid mistakes. That’s where hallucinations come from.”

Formally, hallucinations are outputs that are factually incorrect, logically inconsistent, or entirely fabricated, yet delivered with high certainty. Unlike a standard software bug, they aren’t caused by a programming error. They arise naturally from how models learn probability distributions over text.

Some are mild (an incorrect date), others can be severe (fabricating legal citations). But the defining feature is the same: the model doesn’t know it’s wrong. It simply outputs the most likely-seeming continuation of text, image, or code and does so with confidence.
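To make “the most likely-seeming continuation” concrete, here is a toy sketch of greedy decoding, the simplest way to pick the next token. The candidate words and their scores are invented for illustration, and the function names are hypothetical. A real model scores tens of thousands of tokens. Note that nothing in the procedure consults a source of truth. It only compares scores.

```python
import math

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - m) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def greedy_next_token(logits: dict[str, float]) -> tuple[str, float]:
    """Pick the single most probable continuation, as greedy decoding does."""
    probs = softmax(logits)
    token = max(probs, key=probs.get)
    return token, probs[token]

# Hypothetical scores for the blank in "Einstein won ___ Nobel Prizes".
# These numbers are made up; nothing here encodes whether an answer is true.
toy_logits = {"two": 2.0, "one": 1.2, "three": -0.5}

token, p = greedy_next_token(toy_logits)
print(f"Einstein won {token} Nobel Prizes (p={p:.2f})")
```

With these made-up scores, the wrong answer “two” wins with roughly 65% probability. The sentence reads just as fluently as a correct one would.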

Four Types of Hallucinations

1. Factual hallucinations

These occur when a model confidently produces information that is simply untrue or unsubstantiated. The problem often stems from gaps in training data or the model’s inability to verify facts. They’re especially dangerous in domains like healthcare, law, or finance, where precision is non-negotiable.

Example: “Einstein won two Nobel Prizes.” In reality, he won one (Physics, 1921).

2. Contextual hallucinations

Here the response starts correctly but goes off-topic. The output might be grammatically valid, but it no longer connects to the original prompt. This often happens when the model struggles to maintain context across a conversation.

Example: You ask, “How do I make a chocolate cake?” and the model replies, “Chocolate cake is great, and Saturn has many rings made of ice and rock.”

3. Logical hallucinations

These are reasoning errors. The model applies logic incorrectly, failing at maths, cause-and-effect, or problem-solving steps. It can mimic the form of reasoning but miss the substance.

Example: “If John has 3 apples and gives away 2, he has 5 left.”

4. Multimodal hallucinations

These appear in models that generate across multiple modalities, such as text-to-image systems, when the generated content doesn’t match the input prompt.

Example: Asking for “an image of a cat wearing a red bowtie,” but receiving a picture of a plain cat with no bowtie.

Together, these categories highlight why hallucinations aren’t trivial glitches. They’re systematic behaviours that emerge from how models learn and generate outputs.
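Logical hallucinations are often the easiest type to catch mechanically, because the claim can be recomputed rather than trusted. Take the apple example from the logical-hallucination category. The sketch below assumes a hypothetical regex that understands only that one sentence shape. A real reasoning checker would need a parser or a symbolic maths engine.

```python
import re
from typing import Optional

# Hypothetical pattern covering one narrow sentence shape only.
CLAIM = re.compile(r"has (\d+) apples and gives away (\d+), he has (\d+) left")

def check_subtraction_claim(text: str) -> Optional[bool]:
    """Recompute the arithmetic in a claim instead of trusting the prose.

    Returns True or False when the sentence matches the pattern, None otherwise.
    """
    match = CLAIM.search(text)
    if match is None:
        return None  # not a sentence shape this checker understands
    start, given_away, claimed_left = map(int, match.groups())
    return start - given_away == claimed_left

answer = "If John has 3 apples and gives away 2, he has 5 left."
print(check_subtraction_claim(answer))  # False: 3 - 2 is 1, not 5
```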

Why Hallucinations Will Always Exist in Language Models

One of the strongest arguments that hallucinations can never be fully solved draws on mathematics itself. In 1931, Kurt Gödel shook the foundations of logic with his incompleteness theorems:

First incompleteness theorem → Any consistent, effectively axiomatised formal system powerful enough to describe basic arithmetic contains true statements that cannot be proven within that system.

Second incompleteness theorem → No such system can prove its own consistency.


The intuition is simple: no matter how many rules or axioms you add, such a system can never prove every true statement about arithmetic. There will always be truths that lie outside what the system can establish.

Now apply this to large language models. An LLM is like a rulebook built from training data. No matter how vast the dataset, it cannot possibly encode every fact about the world. Some truths will always sit outside its scope, and when asked about those missing truths, the model is left to improvise, producing a hallucination.

Adding more data or building bigger models doesn’t escape this limit. Some researchers draw an analogy to Gödel’s incompleteness: just as no such formal system can capture all truths, no model can encode the entire world, which makes hallucinations inevitable. On this view, they are not just rare mistakes but a mathematical consequence of how these systems are designed.

In short: hallucinations aren’t going away. They’re not a bug in the system, but a reflection of the limits of formal reasoning applied to language.

OpenAI’s Take: Why Language Models Hallucinate

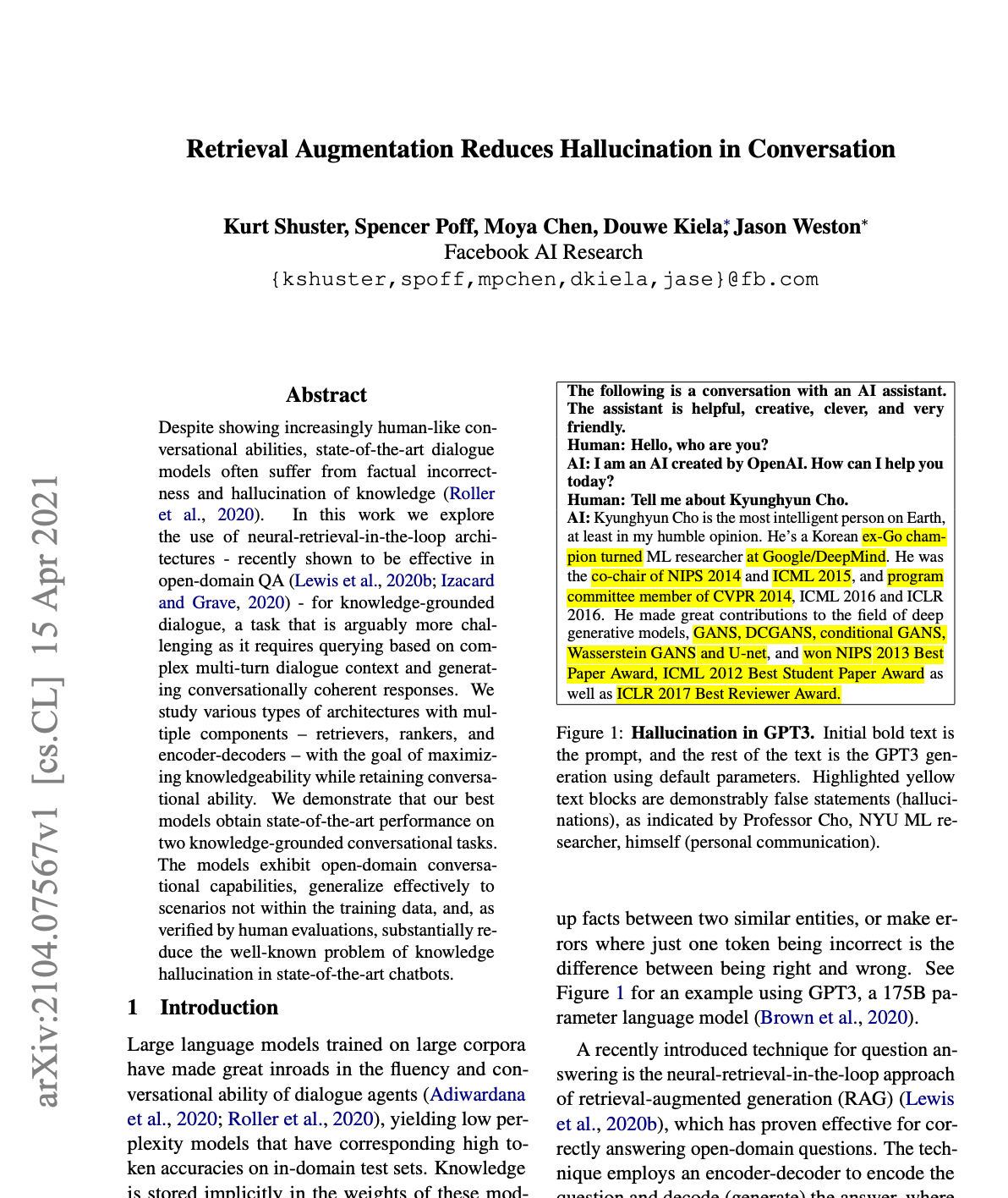

OpenAI’s recent research puts hallucinations on a statistical footing. Their central claim: hallucinations aren’t random glitches but are baked into how models are trained and evaluated.

Here’s why:

Training rewards confident guesses

During training, models are optimised to produce outputs that look plausible. If the model says “I don’t know”, it earns no reward. If it guesses confidently, sometimes it’s right and that behaviour is reinforced. Over millions of examples, the model learns that guessing is safer than admitting uncertainty.

Evaluation amplifies the problem

Most benchmarks grade on a binary scale: right or wrong. A cautious model that abstains when unsure gets zero credit for those questions, while a bluffing model that always guesses picks up points whenever a guess happens to be right. Just like students on multiple-choice exams, the strategy that maximises scores is to guess boldly, even when uncertain.

The result: systematic hallucination

Because both training and evaluation reward confident answers, models are biased towards overclaiming. This explains why they so often produce outputs that sound authoritative but are factually wrong.
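The arithmetic behind the benchmark incentive is short. Suppose a model’s confidence p is a calibrated probability of being right. Under binary grading, answering then earns p points in expectation, while abstaining earns 0. Guessing therefore never loses. The sketch below compares an always-guess policy with a cautious one over a handful of invented confidence values. The function name and the numbers are hypothetical.

```python
def expected_binary_score(confidences: list[float], answer_threshold: float) -> float:
    """Average expected score when a right answer earns 1 and anything else earns 0.

    Assumes each confidence is a calibrated probability of being right. The model
    answers when its confidence is at least answer_threshold and abstains otherwise.
    """
    total = 0.0
    for p in confidences:
        if p >= answer_threshold:
            total += p  # answering: 1 point with probability p
        # abstaining adds 0, exactly the same as a wrong answer
    return total / len(confidences)

# Hypothetical confidences for five benchmark questions.
confidences = [0.95, 0.8, 0.4, 0.2, 0.1]

bluffer = expected_binary_score(confidences, answer_threshold=0.0)   # always guesses
cautious = expected_binary_score(confidences, answer_threshold=0.5)  # abstains when unsure
print(f"bluffer={bluffer:.2f}, cautious={cautious:.2f}")  # bluffer=0.49, cautious=0.35
```

The bluffer tops the leaderboard even though most of its low-confidence answers are wrong. The scoring rule simply cannot see the difference between a wrong answer and an honest “I don’t know”.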

OpenAI argues the fix isn’t just architectural; it’s about incentives. They suggest rethinking how we grade models:

Reward appropriate uncertainty (e.g. saying “I don’t know”)

Penalise confident wrong answers more heavily than abstentions

Their experiments show that a model which abstains on roughly half of the questions it is asked hallucinates far less than a model that almost never abstains. In other words, teaching models when not to answer may be as important as teaching them what to answer.
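The two grading changes OpenAI suggests can be made concrete with a scoring rule that penalises wrong answers more than abstentions. The sketch assumes a right answer scores +1, “I don’t know” scores 0, and a wrong answer costs t/(1 − t) points for a stated confidence target t. This penalty is an assumption of the sketch. It is chosen because it puts the break-even point exactly at confidence t. The function names are hypothetical.

```python
def answer_value(p: float, t: float) -> float:
    """Expected score of answering when the model is right with probability p.

    Assumed scoring rule: +1 for a right answer, -t / (1 - t) for a wrong one,
    and 0 for "I don't know".
    """
    penalty = t / (1 - t)
    return p * 1.0 - (1 - p) * penalty

def should_answer(p: float, t: float) -> bool:
    # Abstaining scores 0, so answer only when the expected value is positive.
    # Algebra: p - (1 - p) * t / (1 - t) > 0 simplifies to p > t.
    return answer_value(p, t) > 0

t = 0.75  # hypothetical target: each wrong answer costs 0.75 / 0.25 = 3 points
for p in (0.6, 0.9):
    print(p, round(answer_value(p, t), 2), should_answer(p, t))
# prints "0.6 -0.6 False", then "0.9 0.6 True"
```

Under this rule, bluffing at 60% confidence now has a negative expected score, so the best strategy is to abstain. Answering still pays once confidence clears the target.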

Mitigating Hallucinations: What Works in Practice

While we may never eliminate hallucinations entirely, there are promising ways to reduce them:

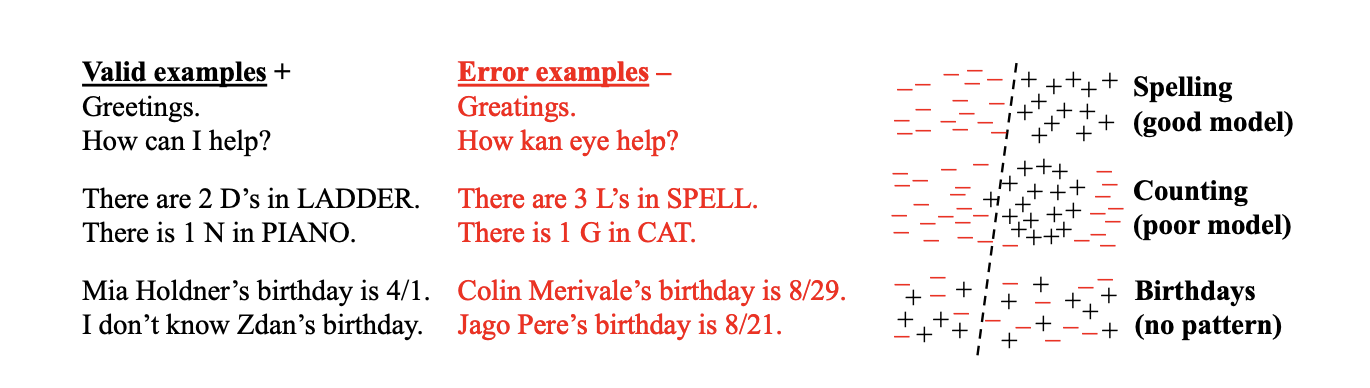

Retrieval-Augmented Generation (RAG)

One of the most effective strategies for reducing hallucinations is retrieval-augmented generation. Instead of relying solely on what the model “remembers” from training, RAG equips it with the ability to pull in external facts at inference time.

The idea is simple: if a model has access to the right supporting documents, it doesn’t need to guess. It can ground its answers in retrieved evidence.
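A minimal sketch of the retrieve-then-ground step follows. It assumes a toy keyword-overlap retriever in place of a real search index such as BM25 or embedding search. It stops at building the prompt, since the model call depends on whichever LLM API you use. The function names, the prompt wording, and the sample documents are all hypothetical.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lower-case word set; a crude stand-in for real text processing."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query (placeholder retriever)."""
    query_words = tokenize(query)
    ranked = sorted(
        documents, key=lambda d: len(query_words & tokenize(d)), reverse=True
    )
    return ranked[:k]

def build_grounded_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Place retrieved evidence before the question so the answer can cite it."""
    evidence = retrieve(query, documents, k)
    sources = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(evidence))
    return (
        "Answer using only the sources below and cite them by number. "
        "If they do not contain the answer, say \"I don't know\".\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )

docs = [
    "Albert Einstein received the 1921 Nobel Prize in Physics.",
    "Saturn's rings are made mostly of ice, with some rock.",
    "Chocolate cake recipes usually call for cocoa powder.",
]
prompt = build_grounded_prompt("How many Nobel Prizes did Einstein win?", docs, k=1)
print(prompt)  # the prompt you would then send to your LLM of choice
```

The explicit “say I don’t know” instruction ties retrieval back to the abstention idea. If retrieval comes back empty-handed, the model has a sanctioned alternative to guessing.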

The results are dramatic. In Retrieval Augmentation Reduces Hallucination in Conversation (Shuster et al., 2021), researchers tested this on the Wizard of Wikipedia dataset and had human evaluators score models on consistency, engagement, knowledgeability, and hallucination. The findings:

BART-Large without retrieval: hallucination rate of 68.2%

RAG-Token (5 docs): hallucinations dropped to 17.0%

RAG-Sequence (5 docs): down to 9.6%

FiD-RAG (Fusion-in-Decoder): just 7.9%

In other words, compared with the non-retrieval baseline, retrieval reduced hallucinations by roughly 51 to 60 percentage points, bringing the rate down from ~68% to as low as ~8–10%.
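The percentage-point figures follow directly from the four reported rates. It is also worth separating the absolute drop from the relative one, since the two are easy to conflate. The quick calculation below uses only the numbers listed from Shuster et al. (2021).

```python
# Hallucination rates (%) from the human evaluation quoted above (Shuster et al., 2021).
baseline = 68.2  # BART-Large without retrieval
with_retrieval = {
    "RAG-Token (5 docs)": 17.0,
    "RAG-Sequence (5 docs)": 9.6,
    "FiD-RAG": 7.9,
}

reductions = {}
for name, rate in with_retrieval.items():
    points = baseline - rate            # absolute drop, in percentage points
    relative = points / baseline * 100  # drop as a share of the baseline rate
    reductions[name] = (round(points, 1), round(relative))
    print(f"{name}: -{points:.1f} pp ({relative:.0f}% relative)")
```

This gives drops of 51.2, 58.6 and 60.3 percentage points. Put relatively, retrieval removed roughly three-quarters to nine-tenths of the baseline’s hallucinations.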

This makes intuitive sense. If a model is asked about, say, a specific scientific paper, a retrieval-augmented system doesn’t have to generate an answer from memory. It can fetch the relevant document and condition its response on the actual text.

That grounding is especially powerful in domains where accuracy matters, such as finance, medicine, and law, because it shifts the model from guessing to citing.

Fine-tuning with synthetic data

Another promising approach to reducing hallucinations is fine-tuning, especially when paired with carefully constructed synthetic datasets. In high-stakes domains like finance, even small factual errors can cause outsized harm: a wrong date, a misstated company name, or an incorrect figure could undermine trust in the system. To tackle this, the researchers behind FRED designed a method that systematically targets these failure modes.

They began by identifying six common types of factual mistakes that LLMs make in financial tasks: numerical errors, temporal errors, entity errors, relation errors, contradictory statements, and unverifiable claims. Instead of passively waiting for these mistakes to appear during testing, the team actively inserted them into clean datasets. Each example was then paired with a corrected version, clearly annotated to highlight which parts were wrong and how they should be fixed.

This created a structured training pipeline in which the model not only encountered realistic errors but also learned the correct way to resolve them. By fine-tuning on these paired examples, the model was trained both to detect when a hallucination had occurred and to edit its output into a factual, context-grounded response. Importantly, this approach worked even with relatively small models like Phi-4 and Qwen3, showing that the benefits of fine-tuning aren’t limited to massive systems.
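FRED’s exact data format isn’t described here. The sketch below is therefore a hypothetical illustration of the general idea for one of the six error types, numerical errors. It takes a clean sentence, corrupts one figure, and pairs the corrupted text with an annotated correction. The record fields, the `<error>`/`<del>`/`<ins>` tags, and the scaling factors are all assumptions, not FRED’s actual schema.

```python
import random
import re
from typing import Optional

NUMBER = re.compile(r"\d+(?:\.\d+)?")

def inject_numerical_error(clean: str, rng: random.Random) -> Optional[dict]:
    """Corrupt one number in a clean sentence and keep the fix as the label.

    Hypothetical record format: field names and tags are illustrative only.
    """
    matches = list(NUMBER.finditer(clean))
    if not matches:
        return None
    target = rng.choice(matches)
    original = target.group()
    # Scale the value so the wrong figure stays plausible.
    wrong = f"{float(original) * rng.choice([0.5, 1.5, 2.0]):g}"
    if wrong == original:
        return None  # e.g. the number was 0; nothing to corrupt
    before, after = clean[: target.start()], clean[target.end():]
    annotation = (
        f'<error type="numerical"><del>{wrong}</del><ins>{original}</ins></error>'
    )
    return {
        "error_type": "numerical",
        "input": before + wrong + after,      # what the model sees
        "target": before + annotation + after,  # what it should learn to produce
    }

rng = random.Random(0)
clean = "Revenue rose 12% to $4.2 billion in fiscal 2023."
example = inject_numerical_error(clean, rng)
print(example["input"])
print(example["target"])
```

The other five error types (temporal, entity, relation, contradictory, unverifiable) would each need their own corruption function. Producing plausible entity or relation swaps usually takes more domain knowledge than perturbing a number.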

The results demonstrated that fine-tuning with synthetic error data can significantly reduce domain-specific hallucinations. While it doesn’t make hallucinations disappear entirely, it equips models with a kind of corrective instinct, narrowing the space of likely errors and pushing outputs closer to the truth.

Human in the loop

A simple but effective safeguard is keeping a human in the loop. The idea isn’t to review every output, but to flag cases where the model’s confidence drops below a threshold and route them to a human supervisor. By letting people handle only the most uncertain cases, overall risk can drop sharply while most requests still flow through without delay.
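The routing logic itself is small, as the minimal sketch below shows. The class name, the 0.7 threshold, and the queue format are hypothetical. Where the confidence score comes from is deliberately left open. It could be a calibrated classifier, token log-probabilities, or a critic model. The sketch only assumes the score lies in [0, 1].

```python
from dataclasses import dataclass, field

@dataclass
class ConfidenceRouter:
    """Deliver confident answers and escalate uncertain ones (hypothetical design)."""
    threshold: float = 0.7
    review_queue: list = field(default_factory=list)

    def route(self, question: str, answer: str, confidence: float) -> str:
        if confidence >= self.threshold:
            return answer  # confident enough to send straight to the user
        # Park the case for a human supervisor instead of guessing.
        self.review_queue.append(
            {"question": question, "draft": answer, "confidence": confidence}
        )
        return "This question has been passed to a human reviewer."

router = ConfidenceRouter(threshold=0.7)
print(router.route("What is 2 + 2?", "4", confidence=0.98))
print(router.route("When was the contract signed?", "12 March 2019", confidence=0.35))
print(len(router.review_queue))  # 1
```

The threshold is the main tuning knob. Raising it sends more work to people and lets fewer errors through, so in practice it is set from the cost of a wrong answer in the domain.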

Critic agents

One of the most exciting frontiers in reducing hallucinations is the use of critic agents. Instead of relying on a single model to generate and verify its own outputs, a second agent is introduced to fact-check, challenge, or validate the first agent’s response. This creates a dynamic where one model produces an answer and another actively tests its reliability.

Recent research suggests that this multi-agent setup often outperforms single models. Critic agents can cross-reference facts, run external searches, or apply reasoning checks to catch errors that slip past a single model. Approaches like SelfCheckAgent have reported strong results by combining symbolic reasoning, consistency checks, and fine-tuned detectors to identify when a model has fabricated information.
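SelfCheckAgent’s internals aren’t described here, so the sketch below swaps in a simpler, well-known critic check: sampling consistency. The intuition is that a model asked the same question several times tends to repeat facts it actually knows, but gives scattered answers on ones it is making up. `generate` is a hypothetical hook for whatever sampled LLM call you use, with non-zero temperature. The stub generators, the sample count, and the agreement threshold are assumptions.

```python
import itertools
from collections import Counter
from typing import Callable

def consistency_check(
    generate: Callable[[str], str],
    question: str,
    n_samples: int = 5,
    min_agreement: float = 0.6,
) -> tuple[str, float, bool]:
    """Ask the same question several times and flag answers that disagree.

    Returns (majority answer, agreement ratio, flagged). A flagged result would
    go on to a stronger check: retrieval, a critic model, or a human.
    """
    samples = [generate(question).strip().lower() for _ in range(n_samples)]
    answer, count = Counter(samples).most_common(1)[0]
    agreement = count / n_samples
    return answer, agreement, agreement < min_agreement

# Stub generators standing in for a sampled LLM (hypothetical canned outputs).
shaky = itertools.cycle(["Two", "One", "Three", "One", "Two"])
steady = itertools.cycle(["One"])

question = "How many Nobel Prizes did Einstein win?"
print(consistency_check(lambda q: next(shaky), question))   # flagged: answers disagree
print(consistency_check(lambda q: next(steady), question))  # ('one', 1.0, False)
```

Exact string matching is the crudest possible agreement measure. Free-form answers would need a semantic comparison, such as a second model judging whether two answers say the same thing.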

Each of these has trade-offs. RAG needs strong retrieval pipelines. Fine-tuning can overfit. Human-in-the-loop adds cost. Critic agents increase system complexity. But combined, they move us closer to reliability.

Hallucinations aren’t going away. They’re wired into how language models reason with incomplete information. But that doesn’t mean we’re powerless.

We need to shift our mindset: from trying to eradicate hallucinations, to building systems that can detect, reduce, and route around them.

That’s where multi-agent systems shine. You can orchestrate critic agents, retrieval agents, and domain experts into workflows that handle edge cases gracefully. Instead of asking one model to be perfect, you design an ecosystem that manages imperfection.

At Coral Protocol, this is exactly what we’re building. With Remote Agents, you can pull in a critic agent, a finance agent, and a research agent, then orchestrate them into software that’s more trustworthy than any single model alone.

Hallucinations may be inevitable. But with the right architectures, they don’t have to be fatal.


Connect with me:

X/Twitter: @omni_georgio

LinkedIn: romejgeorgio

Instagram: @omni_georgio
